At present, we lack objective knowledge of the clinical reasoning of paediatric residents during their training, which prevents remedial training from being targeted at the deficits detected. The Script Concordance Test (SCT) evaluates clinical reasoning by presenting real-world clinical situations, but it has not yet been used in Spain to evaluate clinical reasoning in paediatric primary care. We therefore considered it relevant to design a paediatric primary care SCT that meets the validity, reliability and acceptability criteria described in the literature.

Methods

Development, validation and application of an SCT questionnaire to assess clinical reasoning in paediatric primary care, administered to a population of paediatric residents. The questionnaire also collected demographic and employment data to study their possible association with the scores obtained.

Results

Our SCT was approved by a committee of experts. It met the reliability and acceptability criteria and distinguished experts from paediatric residents. No statistically significant differences were found based on age, gender, type and duration of the training received in primary care, or completion of a course on that training.

Conclusions

We developed an SCT that was approved by a committee of paediatric experts, met the reliability and acceptability criteria, and distinguished the clinical reasoning of experts from that of paediatric residents. Except for the second year of residency, we did not find statistically significant differences based on residency year, age, gender, completion and duration of the paediatric primary care rotation, or completion of a training course.


Specialised medical intern-resident (MIR) training programmes have barely changed in the past 30 years1 and need to be revised to adapt them to advances in education and evaluation.

In Spain, training in paediatrics is mostly delivered in the hospital setting2, even though health care organization is based on a primary care (PC) model. Primary care paediatrics (PCP) focuses on the paediatric population at every stage and therefore the rotation of residents through this setting is crucial for their training, regardless of the setting in which their professional career develops in the future3.

It was not until 2006 that the new paediatrics MIR curriculum included a compulsory 3-month rotation in PCP3; however, different paediatrics societies have demanded that training at the PC level be extended to 12 months, in order to balance what is currently a nearly exclusively hospital-based training4. The implementation of this demand should be associated with an ongoing internal evaluation of the MIR system to identify areas of improvement and adapt training to the current context of PCP1.

The training of physicians is based on acquisition of theoretical knowledge, practical skills and clinical reasoning skills, of which the last two are acquired during graduate education (post-medical baccalaureate degree). Typically, assessment during graduate education has been based exclusively on written exams, including multiple-choice tests, and the subjective appraisal of evaluators5, thus assessing performance out of context in a manner that may not adequately reflect real-world clinical practice.

At present, new methods such as the script concordance test (SCT) allow an adequate and objective evaluation of clinical reasoning, as they provide hypotheses to the subjects of the evaluation and guide the collection of information and decision-making, so that the collected information is used to confirm or reject the hypotheses5, as would be done in real-world clinical practice.

The characteristics of this type of test are based on the assumption that structured knowledge resides in a complex network of neural connections that interrelate different clinical scenarios and allow professionals to solve problems in clinical practice. Thus, the professionals with the greatest expertise would not be those holding the greatest amounts of knowledge, but those with the richest networks of mental associations5.

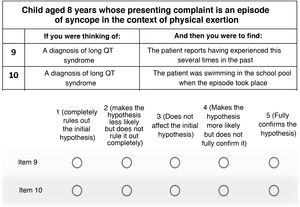

The SCT presents a clinical case (Fig. 1), defines the hypotheses that the professional can consider based on the presenting signs and symptoms of the patient, and lastly provides additional information. When professionals receive this information, they have to assess how it fits in the clinical case originally described and how it affects the proposed hypothesis (confirmation, rejection or no effect) on a 5-point Likert scale5.

Fig. 1. Example of a clinical case in an SCT5.

Several studies that have used SCTs have evinced that professional experience, measured merely as the number of years working in the field, is not associated with greater clinical reasoning skills if this experience is not associated with increasing involvement in the type of clinical practice that is under evaluation (for instance, the number of surgeries performed, in the case of gynaecology)6 or ongoing updating of knowledge and skills in the field (continuing education courses, in the case of paediatric cardiology)5. This suggests that clinical reasoning skills may decrease with professional experience if the latter is not associated with these two elements.

In addition, there is evidence that knowledge wanes over time in a so-called forgetting curve, which shows a logarithmic reduction in memory retention as a function of the time elapsed7. At present, many training programmes focus on the need to curb knowledge loss, improving long-term retention through continuing education of clinicians8. Constant updating of knowledge, which is the basis of clinical reasoning, is a way to fight against the forgetting curve, and thus can indirectly have a positive impact on clinical reasoning.

To date, SCTs have been applied in several specialities to assess clinical reasoning, but few studies have been conducted in the field of paediatrics, and none have been conducted in Spain with a focus on contents in the scope of PCP, despite the fact that PC is the setting where 90% of the presenting complaints of paediatric patients are resolved and where nearly 80% of residents that complete their MIR training end up working2.

For this reason, we considered it relevant to design an SCT for PCP that meets the content and criterion-related validity, reliability and acceptability criteria described in the literature. The aim of our study was to assess:

- Whether residents have adequate clinical reasoning skills in PCP to engage in clinical practice,
- Whether the residency year in the MIR programme had an impact on the SCT score,
- Whether the years of experience in PCP of experts had an impact on the SCT score,
- Whether there is an association between the participation and duration of the rotation in PCP and the SCT score,
- Whether there is an association between the sex and age of residents and the SCT score,
- Whether participation in a PCP course is sufficient to improve the SCT score.

The lead author developed an SCT comprising 115 items, adhering to the guidelines for the construction of these tests9 and using frequent reasons for consultation in PCP and the literature on PCP training and education10.

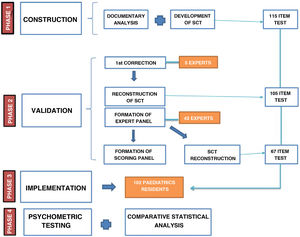

The validation of the questionnaire, a summary of which is presented in Fig. 2, started with a review by 5 experts in PCP (with 6 or more years of experience), who edited the items and selected for inclusion those approved by 80% of the raters (expert validity criterion11), thus reducing the length of the questionnaire to 105 items.

To form the panel of experts, we submitted the questionnaire online through the Google Forms platform (available at https://www.google.es/intl/es/forms/about/) to several paediatrics societies and forums. We received 45 completed questionnaires, thus amply exceeding the response rate in previous studies in the field of paediatrics12,13.

We also collected data on the sex, age and years of experience in PCP. The scoring key was created based on the answers of the panel of experts, eliminating items with low discrimination (extreme variance, unanimous responses or uniform distribution of responses), so that the final questionnaire was reduced to 67 items.
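The construction of the scoring key can be illustrated with a short sketch. It assumes the aggregate scoring method commonly described for SCTs, in which each response option earns credit equal to the number of panel members who chose it divided by the number who chose the modal option. The text does not spell out the formula, so the function names (`scoring_key`, `is_low_discrimination`, `sct_score`), the -2 to +2 coding of the 5-point scale and the exact discard rule are illustrative assumptions. The "extreme variance" criterion is not defined in the text and is left out.

```python
from collections import Counter

# Assumed coding of the 5-point Likert scale (not specified in the text).
LIKERT = (-2, -1, 0, 1, 2)


def scoring_key(panel_answers):
    """Credit per option for one item: votes divided by the modal vote count."""
    counts = Counter(panel_answers)
    modal_votes = max(counts.values())
    return {option: counts.get(option, 0) / modal_votes for option in LIKERT}


def is_low_discrimination(panel_answers):
    """Flag items with unanimous or perfectly uniform panel responses."""
    counts = Counter(panel_answers)
    votes = [counts.get(option, 0) for option in LIKERT]
    unanimous = max(votes) == len(panel_answers)
    uniform = len(set(votes)) == 1
    return unanimous or uniform


def sct_score(respondent_answers, keys):
    """Mean credit across items, rescaled to a 0-100 score."""
    credits = [key[answer] for answer, key in zip(respondent_answers, keys)]
    return 100 * sum(credits) / len(credits)


# Hypothetical panel of 10 experts answering a single item.
key = scoring_key([1] * 6 + [2] * 3 + [0])
print(sct_score([1, 2], [key, key]))  # 75.0
```

Under this scheme a respondent who agrees with the modal expert answer earns full credit for the item, and partial credit reflects the legitimate variability of expert opinion.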

Lastly, the definitive SCT was sent to 1750 residents in paediatrics teaching units in Spain, and we received 102 completed questionnaires (response rate of 5.8%).

The passing score of the questionnaire was determined based on the criterion proposed by Duggan of classifying subjects based on their score transformed to standard deviations (SDs) from the expert mean, setting 4 SDs as the cut-off point14.
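The cut-off can be sketched as follows, assuming that failing means scoring more than 4 SDs below the expert mean. The function names are hypothetical, and the example plugs in the expert mean and SD reported in the results (77.3 and 5.4); it is not a reproduction of the authors' calculation.

```python
def sds_from_expert_mean(score, expert_mean, expert_sd):
    """Express a score as a number of expert SDs from the expert mean."""
    return (score - expert_mean) / expert_sd


def pass_threshold(expert_mean, expert_sd, cutoff_sds=4.0):
    """Lowest passing score: cutoff_sds expert SDs below the expert mean."""
    return expert_mean - cutoff_sds * expert_sd


def passes(score, expert_mean, expert_sd, cutoff_sds=4.0):
    """True when the score is no more than cutoff_sds SDs below the expert mean."""
    return sds_from_expert_mean(score, expert_mean, expert_sd) >= -cutoff_sds


# Expert panel summary statistics taken from the results section.
print(round(pass_threshold(77.3, 5.4), 1))  # 55.7
```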

The questionnaire collected the following data on respondents: residency year, age, sex, rotation or lack of rotation in PCP, duration of this rotation (<1 month, 1–3 months, >3 months), and participation or lack of participation in a PCP course.

We used the Student t-test to compare 2 means and one-way analysis of variance (ANOVA) to compare more than 2 means. We analysed the association between quantitative variables graphically through linear regression. Statistical significance was defined as a two-tailed P value of 0.05 or less, and all tests were performed with the Stata© software, version 14.2.
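For readers who want to check a two-group comparison from published summary statistics, the equal-variance Student t statistic can be computed as in the sketch below. The analyses in the study were run in Stata; `pooled_t` is a hypothetical helper, and the example uses the expert and resident means, SDs and sample sizes given in the results. Obtaining the P value additionally requires the t distribution, for instance through a statistics library.

```python
from math import sqrt


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Equal-variance Student t statistic and degrees of freedom."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Experts (n = 43) versus residents (n = 102), from the results section.
t, df = pooled_t(77.3, 5.4, 43, 71.7, 7.8, 102)
print(round(t, 2), df)  # 4.29 143
```

A t statistic of this size with 143 degrees of freedom is in line with the very small P value reported for the comparison between experts and residents.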

The psychometric properties of the questionnaire assessed in the study were its validity, reliability (using the Cronbach alpha coefficient15) and acceptability.
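As a reminder of what the reliability coefficient measures, the standard Cronbach alpha formula, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores), can be sketched as below. The function name and the toy data are illustrative assumptions and bear no relation to the study data.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach alpha for a matrix with one row per respondent and one column per item."""
    k = len(item_scores[0])
    item_variances = [variance(column) for column in zip(*item_scores)]
    total_variance = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical item credits for 4 respondents on 3 items.
toy_scores = [
    [1.0, 0.5, 1.0],
    [0.5, 0.5, 0.0],
    [0.0, 0.0, 0.5],
    [1.0, 1.0, 1.0],
]
print(cronbach_alpha(toy_scores))
```

Because alpha depends on the number of items k, lengthening a questionnaire with items of comparable quality tends to raise it.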

Results

In terms of validity, the 67 items of the questionnaire fulfilled the expert validity criterion (more than 80% agreement) and confirmed the hypothesis regarding the level of training: experts scored higher than residents, and therefore the questionnaire could discriminate between them.

As concerns reliability, the Cronbach alpha for medical residents was 0.74.

Five residents (4.9%) failed the test based on the Duggan criterion (4 SD cut-off). Two experts were excluded from the analysis because their scores were below 50 (49.4 and 46.1).

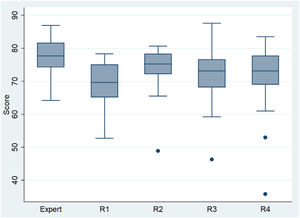

The mean score of the experts (N = 43) was 77.3 (SD, 5.4), greater than the mean of 71.7 (SD, 7.8) in the 102 residents (P < 0.00005). This significant difference compared to the experts was observed in year 1 residents (R1) (P < 0.00005), year 3 residents (R3) (P < 0.001) and year 4 residents (R4) (P < 0.011), but not in year 2 residents (R2) (P = 0.078). Year 2 residents had the highest mean score (Table 1 and Fig. 3), 4.5 points above R1s (P = 0.0287; 95% confidence interval [CI], 0.5–8.5). The differences relative to R3s and R4s were not statistically significant.
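As an illustrative check, the reported difference between R2s and R1s can be approximately reproduced from the summary statistics in Table 1, assuming the equal-variance Student t-test described in the methods. Because the inputs are rounded to one decimal place, the result only approximates the published values.

$$
s_p^2 = \frac{(n_{R1}-1)\,s_{R1}^2 + (n_{R2}-1)\,s_{R2}^2}{n_{R1}+n_{R2}-2} = \frac{24 \times 6.1^2 + 20 \times 7.2^2}{44} \approx 43.86
$$

$$
SE = s_p \sqrt{\frac{1}{n_{R1}} + \frac{1}{n_{R2}}} \approx 6.62 \times 0.296 \approx 1.96
$$

$$
(\bar{x}_{R2} - \bar{x}_{R1}) \pm t_{0.975,\,44} \times SE \approx 4.5 \pm 2.015 \times 1.96 \approx (0.55,\ 8.45)
$$

This agrees with the reported 95% CI of 0.5–8.5 to within rounding.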

Table 1. Measures of central tendency and dispersion of the scores of the residents in the script concordance test.

| | n | Mean | SD |
|---|---|---|---|
| Year of residency | | | |
| R1 | 25 | 69.6 | 6.1 |
| R2 | 21 | 74.1 | 7.2 |
| R3 | 32 | 71.9 | 7.5 |
| R4 | 24 | 71.4 | 10 |
| Sex | | | |
| Male | 24 | 70.1 | 9.2 |
| Female | 78 | 72.1 | 7.3 |
| Rotation in PCP | | | |
| Yes | 78 | 72.4 | 8.1 |
| No | 24 | 69.3 | 6.3 |
| Duration of rotation | | | |
| <1 month | 15 | 73.8 | 8.2 |
| 1–3 months | 56 | 72.5 | 7 |
| >3 months | 7 | 68.8 | 15 |
| PCP course | | | |
| Yes | 55 | 71.7 | 9.5 |
| No | 47 | 71.7 | 5.4 |

PCP, primary care paediatrics; SD, standard deviation.

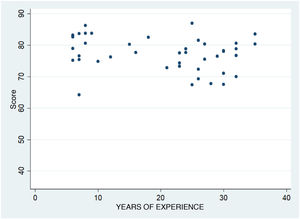

As can be seen in Fig. 4, we found no evidence of an association between the years of experience in PCP of the experts and their score in the SCT (P = 0.1).

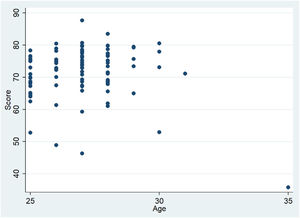

We did not find statistically significant differences in the scores of male vs female residents (P = 0.331). The association between age and the SCT score was also not significant (P = 0.5753) (Fig. 5).

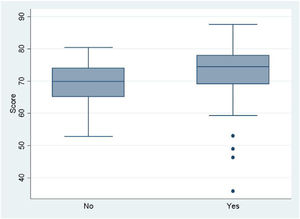

As regards rotations in PCP (Fig. 6), we did not find significantly better scores in residents that did rotations (P = 0.058). We also found no significant differences comparing the 3 possible durations of rotations in PCP (P = 0.4089).

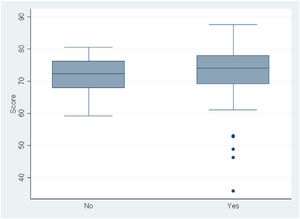

When we compared the mean scores of residents based on whether or not they had taken a course in PCP (Fig. 7), we did not find significantly better scores in those that had taken one (P = 0.995).

Discussion

We created an SCT questionnaire comprising 67 items that met validity criteria, as clinical reasoning was better in the experts than in the overall group of residents, as observed in previous works13,16–21.

As regards reliability, we estimated an internal consistency of 0.74, which would be adequate, given that it exceeded the minimum threshold of 0.7 previously established in the literature22.

We believe that reliability could have been improved by increasing the length of the questionnaire, but that this would have had a negative impact on the sample size. In spite of this, the sample of 102 responses allowed us to achieve the validity objectives set for the questionnaire, and has only been surpassed by a study that included 268 responses, although all were from residents in the same year of training23.

The acceptability (construction, scoring, correction, codification and interpretation of the questionnaire) corresponded to what has been described in the literature.

The percentage of residents that failed the test (4.9%) was very similar to the one described by Duggan (3%)14 and most other studies6,24–27, which suggests that we used an adequate threshold to determine whether residents passed the test and correctly identify those with clinical reasoning deficits.

As expected given the level of training of respondents, scores were higher in the group of experts compared to the residents. These differences were consistent with multiple previous studies12–14,28–34, which supports that the questionnaire was constructed correctly.

However, we did not find an increase in the score based on advancing through the residency years. This was consistent with the findings of Power et al.18 and Steinberg et al.35, whose studies did not find an increase in the score based on increasing year of residency, and contrary to the results of 2 studies that also assessed residents in paediatrics12,13.

Although it would have been reasonable to expect higher scores in year 4 residents, who in theory would be better trained, it was year 2 residents that had the highest scores.

We can think of two factors that may explain the higher scores in second-year residents: first, having completed the first year of residency, which sets the foundation of the training, and second, that rotations completed in the first year of residency are in settings that require knowledge and skills that are more pertinent to clinical reasoning in PCP, such as the emergency department and neonatal care.

The lack of significant differences in the comparison of R2s, R3s and R4s was also consistent with the studies of Power et al.18 and Steinberg et al.35, also supporting the notion that this tool would not be useful to discriminate between residency levels, but instead to discriminate between fully trained physicians and residents in training.

Contrary to the studies conducted by Rajapreyar et al.31 and Kazour et al.36, in which scores decreased with increasing professional experience or with the lack of continuing education5, we did not find an association between the years of experience in PCP of the experts and the score in the SCT.

We consider that, given the broad scope of the field, it would be unusual for the assessed experts not to have taken at least one course on PCP during their careers, especially given that we recruited the sample of experts from the membership of several paediatrics societies and forums, both of which promote continuing education. This could have been a source of selection bias and could explain why the score did not decrease with age, as older participants could have taken more courses and therefore have more up-to-date training, although we did not explore this variable in our study.

We did not find differences in the SCT score based on sex, and our sample, in which 76.4% of the residents were female, had a sex distribution consistent with the descriptions in the current literature1.

In relation to rotations in PCP, we did not find statistically significant differences in the score. This was consistent with the findings of Peyrony et al.37 However, other authors have found statistically significant differences in SCT scores following rotations36,38,39.

We also compared the scores of experts and residents, of residents by residency year, and of residents by duration of PCP rotation using the Mann-Whitney U test and the Kruskal-Wallis test, and obtained the same results.

In light of the findings of these works, we believe that the current 3-month duration of PCP rotations may have affected our results, as it may be insufficient to improve clinical reasoning. Longer rotations, as advocated by paediatricians2, may be required to improve outcomes and achieve significant differences, an argument that is supported by previous evidence5,36,38.

The absence of significant differences in the SCT score in favour of those who had taken courses in PC contradicted the findings of several studies in the field of family medicine, paediatrics and pharmacy5,40,41.

One of the possible explanations for this finding could be that we did not take into account the number of continuing education courses that had been taken, but only whether respondents had participated in any. We think that this may have made it difficult to discriminate between clinicians that had been updating their knowledge and skills and those who had not, which could account for this result.

The hypothesis that several courses need to be taken to improve clinical reasoning and, therefore, the score in the SCT, reinforces the importance of continuing education, as opposed to the accumulation of years of paediatric clinical practice5,31,42, as we mentioned in the introduction. In addition, the development of continuing education programmes focused on the educational deficiencies of clinicians would help ensure the quality of care delivery and allow the health care needs of the population to be met more efficiently. Although few studies have analysed this aspect, there is evidence of a substantial increase in scores after completion of educational programmes5,40,41.

Chief among the limitations of our study is the inability to cover all the contents of PCP in the SCT, as an excessively long questionnaire would have resulted in an even smaller sample size. We also think that the low response rate may be due to the reluctance of some residents with limited clinical reasoning skills to answer the questions, leading them to refuse participation. This would have limited the sample size and also given rise to a sample that was not uniformly representative of residents, which may explain why reliability, while adequate, was not higher. We believe that administration of the questionnaire under supervision and placing a limit on the response time could help achieve a larger sample size.

Thus, we conclude that this SCT could be useful for the evaluation and, in the future, the continuing education of residents. In developing this tool, our intent is not to substitute but to complement the current assessment of residents, assisting resident supervisors and the individuals responsible for developing the MIR programme in each institution in assessing residents comprehensively and objectively. We therefore propose its incorporation by health care administrations in the evaluations conducted at the different stages of paediatrics specialty training.

We believe that if the SCT were administered in person, under supervision and as a compulsory step in residency training, with a limit on the time given for its completion and with its contents and length optimised to increase the sample size and internal consistency, it could appreciably improve paediatric care delivery.

Conclusions

We built an SCT with 67 items that was approved by a panel of experts, could discriminate clinicians based on their level of training, and allowed us to assess whether clinicians had adequate clinical reasoning skills in PCP and whether there were differences between fully trained staff physicians and residents.

Except for year 2 of the residency, we did not find statistically significant differences based on the year of residency, age, sex, participation in a rotation in PC and its duration and participation in a PCP course.

Having analysed these parameters, we may conclude that this SCT could be a useful tool to assess the adequacy of clinical reasoning skills in PCP in medical residents.

Conflicts of interest

The authors have no conflicts of interest to declare.

Please cite this article as: Iglesias Gómez C, González Sequeros O, Salmerón Martínez D. Evaluación mediante script concordance test del razonamiento clínico de residentes en Atención Primaria. An Pediatr (Barc). 2022;97:87–94.